In the first post of this series, we discussed a few Secure Software Development Lifecycle (SSDLC) metrics that I personally find very interesting. The four metrics are:

- The number of qualified defects found per quarter

- The number of qualified defects fixed per quarter

- The difference between the first two metrics, that is, inflow vs. outflow

- The overall number of open qualified security defects
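As a minimal sketch of how these four metrics relate to each other, assume we have the found and fixed counts per quarter. The names `QuarterCounts`, `net_inflow`, `open_backlog`, and `initial_open` are hypothetical and only serve this illustration; they are not taken from any particular tracking tool.

```python
from dataclasses import dataclass
from itertools import accumulate


@dataclass(frozen=True)
class QuarterCounts:
    """Hypothetical per-quarter record of qualified security defects."""
    found: int  # metric 1: qualified defects found in the quarter
    fixed: int  # metric 2: qualified defects fixed in the quarter


def net_inflow(quarters):
    """Metric 3: inflow minus outflow for each quarter."""
    return [q.found - q.fixed for q in quarters]


def open_backlog(quarters, initial_open=0):
    """Metric 4: open defects at the end of each quarter.

    Assumes the backlog only changes through the found/fixed counts,
    starting from `initial_open` defects before the first quarter.
    """
    running = accumulate(net_inflow(quarters), initial=initial_open)
    return list(running)[1:]  # drop the starting value itself


quarters = [QuarterCounts(30, 10), QuarterCounts(10, 20), QuarterCounts(5, 10)]
print(net_inflow(quarters))       # per-quarter difference
print(open_backlog(quarters, 5))  # running backlog
```

In this simplified model the fourth metric is just the running sum of the third, which is why a sustained positive difference shows up as a growing backlog.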

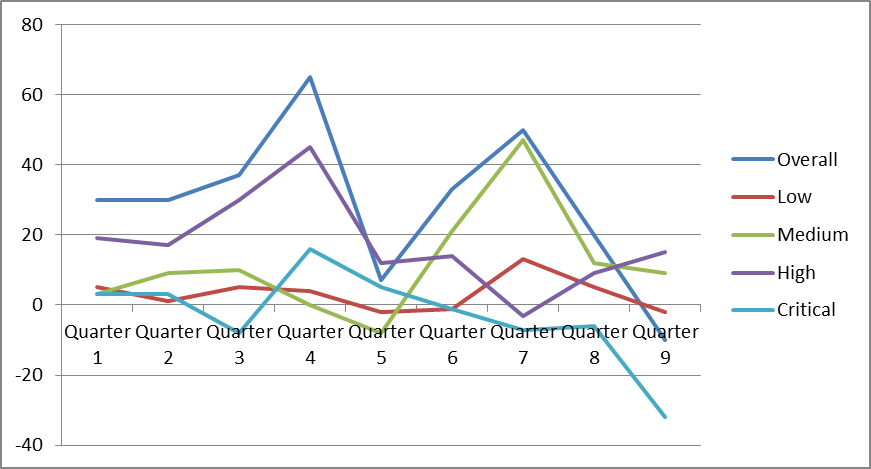

In this post, I will share some inflow / outflow metrics, and their development over time. This data is taken from the same organization and product set discussed in the first post of this series, and it has been anonymized, randomized, and transformed into a relative scale to protect confidential information without affecting the trends that are typically seen when starting a new SSDLC program. All percentages in the graph are relative to the results of the 20 Quarters before the SSDLC program was started.

Inflow vs Outflow

The inflow / outflow metric gives a good indication of how successful an organization is in dealing with newly found issues: can they qualify and address security defects quickly, or are they overwhelmed by the influx of new issues and just keep piling them onto a backlog?

This graph shows the difference between the number of incoming new defects and the number of defects that have been closed in the same time period. As in the previous illustrations, the graph shows relative numbers (percentages) relative to the results of the first 20 Quarters. Unfortunately, this makes the graph a little harder to read, because the percentages do not directly translate into the actual number of issues that have been added to the backlog. In this graph, large positive percentages mean that work is being added to the backlog. A negative percentage (or one close to 0) is desirable, because it means that the team is ahead of, or at least keeping up with, the influx of work.
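To make the relative scale more concrete, here is a small sketch of one way such a percentage could be derived. It assumes the difference is normalized by the average quarterly inflow of the baseline period; the exact normalization behind the graph is not stated here, so treat the function `net_inflow_percent` and its parameters as hypothetical.

```python
from statistics import mean


def net_inflow_percent(found, fixed, baseline_found):
    """Express (found - fixed) per quarter as a percentage of the
    average quarterly inflow during the baseline period.

    Assumption: the baseline is the mean of `baseline_found`; the
    original data may have been normalized differently.
    """
    if len(found) != len(fixed):
        raise ValueError("found and fixed must cover the same quarters")
    baseline = mean(baseline_found)
    if baseline == 0:
        raise ValueError("baseline inflow must be non-zero")
    return [100.0 * (f - x) / baseline for f, x in zip(found, fixed)]


# Positive values: work was added to the backlog in that quarter.
# Negative values: the team closed more defects than were reported.
print(net_inflow_percent([30, 10], [10, 20], [20, 20]))
```

Under this assumption a value of 100% means the backlog grew by as many defects as were found in an average baseline quarter, which is more informative than the bare percentage.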

This graph shows two peaks, a very common characteristic of organizations where multiple groups contribute to finding security problems but only one group fixes them. If we compare this to the two graphs we discussed in the previous post, we can explain the first peak by a large inflow of defects that the developers are not yet prepared to handle. About 12 months into the program, the engineers start to catch up. This is very common and a good sign: it reflects the learning and training that the developers have to go through to know how to deal with the findings, followed by the developers applying what they have learned and catching up with incoming work. This first peak is almost always present when rolling out a new SSDLC program.

The second peak is very typical for an organization with dedicated QA teams or security pen testing teams. Once these teams have been ramped up, completed their training, and are fully operational, their reporting output picks up significantly, typically also after about 12 months. Unlike the R&D team (who may also report more defects against their own code), they are usually not chartered to fix the issues they discover. This puts additional pressure on the developer team, which must adjust (again) to the higher influx of security issues. Once this adjustment is complete (Quarters 8 and 9), the organization reaches an ideal state of close to zero or negative inflow / outflow difference.

The graph also reveals how an organization is prioritizing work. In this case, the organization is rightly prioritizing work on critical security issues. However, we can also see that they are focusing on medium and low severity problems first, before addressing high severity issues. This may be justified, for instance if the key people who can deal with the high severity problems are unavailable because they are assigned to the critical severity defects, or if some of the higher severity defects do not manifest as security vulnerabilities (e.g. because defense in depth covers them), so that their fixes can wait while lower-severity problems that do lead to security vulnerabilities are addressed. This metric makes potential prioritization problems visible and actionable.
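One way to surface such a pattern automatically is sketched below. It is a simple heuristic, not part of the program described here: it flags pairs of severities where the backlog of the higher severity grew in a quarter while the backlog of a lower severity shrank. The severity names, their order, and the function `prioritization_inversions` are assumptions made for illustration.

```python
# Assumed severity scale, ordered from most to least severe.
SEVERITY_ORDER = ["critical", "high", "medium", "low"]


def prioritization_inversions(net_by_severity):
    """Return (higher, lower) severity pairs where the higher-severity
    backlog grew (net inflow > 0) while the lower-severity backlog
    shrank (net inflow < 0) in the same period.

    `net_by_severity` maps a severity name to found minus fixed.
    Severities missing from the mapping are treated as 0.
    """
    inversions = []
    for i, higher in enumerate(SEVERITY_ORDER):
        for lower in SEVERITY_ORDER[i + 1:]:
            grew = net_by_severity.get(higher, 0) > 0
            shrank = net_by_severity.get(lower, 0) < 0
            if grew and shrank:
                inversions.append((higher, lower))
    return inversions


quarter = {"critical": -5, "high": 8, "medium": -3, "low": -1}
print(prioritization_inversions(quarter))
```

A flagged pair is only a prompt for a conversation, since, as noted above, there can be good reasons for the apparent inversion.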
